Table of Contents

- What are quasi-experimental research designs?

- Characterize fidelity and measures of implementation processes

- What are the characteristics of quasi-experimental designs?

- Interrupted Time Series

- Types Of Quasi-Experimental Designs

Second, propensity score weighting (e.g. Morgan, 2018) can statistically mitigate internal validity concerns, although this approach may be of limited utility when comparing secular trends between different study cohorts (Dimick and Ryan, 2014). More broadly, qualitative methods (e.g. periodic interviews with staff at intervention and control sites) can help uncover key contextual factors that may be affecting study results above and beyond the intervention itself. We also note that there are a variety of Single Subject Experimental Designs (SSEDs; Byiers et al., 2012), including withdrawal designs and alternating treatment designs, that can be used in testing evidence-based practices. Similarly, an implementation strategy may be used to encourage the use of a specific treatment at a particular site, followed by that strategy’s withdrawal and subsequent reinstatement, with data collection throughout the process (on-off-on or ABA design).
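To make propensity score weighting concrete, here is a minimal sketch. It assumes a single categorical covariate (a hypothetical urban/rural site type), which lets the propensity score be estimated as the share of treated units within each stratum. In practice the score would usually come from a logistic regression on several covariates. The normalized (Hajek) inverse-probability-weighted difference in means then reweights each group toward the covariate mix of the whole sample. All names and data are illustrative.

```python
from collections import defaultdict


def stratum_propensity(covariate, treated):
    """Estimate P(treated | covariate) as the treated share within each stratum."""
    counts = defaultdict(lambda: [0, 0])  # stratum -> [n_treated, n_total]
    for x, t in zip(covariate, treated):
        counts[x][0] += t
        counts[x][1] += 1
    return [counts[x][0] / counts[x][1] for x in covariate]


def ipw_effect(outcome, treated, propensity):
    """Normalized (Hajek) inverse-probability-weighted difference in means.

    Assumes positivity: every propensity lies strictly between 0 and 1.
    """
    w1 = [t / e for t, e in zip(treated, propensity)]  # weights for treated units
    w0 = [(1 - t) / (1 - e) for t, e in zip(treated, propensity)]  # weights for controls
    mean1 = sum(w * y for w, y in zip(w1, outcome)) / sum(w1)
    mean0 = sum(w * y for w, y in zip(w0, outcome)) / sum(w0)
    return mean1 - mean0


# Hypothetical data: urban sites are more likely to adopt the intervention
# and also have higher baseline outcomes, so a naive comparison is biased.
site = ["urban"] * 4 + ["rural"] * 4
treated = [1, 1, 1, 0, 1, 0, 0, 0]
outcome = [12, 12, 12, 10, 7, 5, 5, 5]

e = stratum_propensity(site, treated)
print(ipw_effect(outcome, treated, e))
```

In these made-up data the naive treated-minus-control difference is inflated by the urban sites' higher baseline, and weighting removes that imbalance. This only works for confounders that are measured, which is why the text pairs it with qualitative methods.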

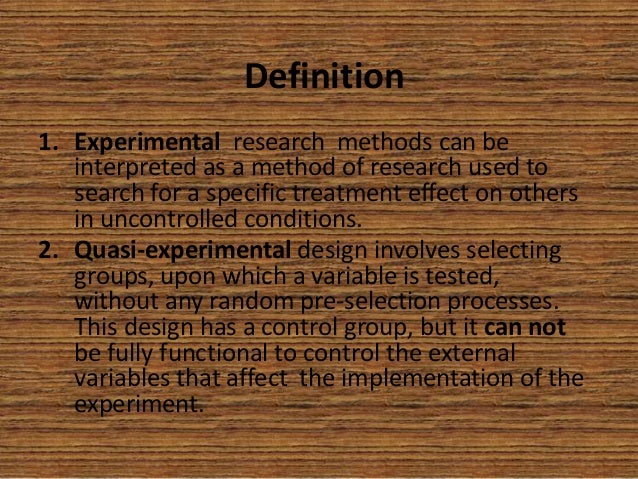

What are quasi-experimental research designs?

For example, a manufacturing company might measure its workers’ productivity each week for a year. In an interrupted time-series design, a time series like this one is “interrupted” by a treatment. In one classic example, the treatment was the reduction of work shifts in a factory from 10 hours to 8 hours (Cook & Campbell, 1979)[5].

Characterize fidelity and measures of implementation processes

In an experiment with random assignment, each study unit has the same chance of being assigned to a given treatment condition. As a result, random assignment makes the experimental and control groups equivalent in expectation: any differences that remain between them are due to chance rather than to systematic selection. In a quasi-experimental design, assignment to a given treatment condition is based on something other than random assignment.
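The phrase "equivalent in expectation" can be checked with a small simulation, shown here as a minimal sketch. It assumes complete randomization into two equal-sized arms and a hypothetical baseline covariate (age). A single draw can leave the arms noticeably imbalanced, but the average imbalance over many draws is close to zero. Function names and data are illustrative.

```python
import random
from statistics import mean


def randomize(units, rng):
    """Complete randomization: shuffle the units and split them into two equal-sized arms."""
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def average_baseline_gap(ages, n_draws=5000, seed=1):
    """Average treatment-minus-control difference in a baseline covariate over many randomizations."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_draws):
        treat, control = randomize(ages, rng)  # iterating a dict yields its unit ids
        gaps.append(mean(ages[u] for u in treat) - mean(ages[u] for u in control))
    return mean(gaps)


# Hypothetical baseline ages for six study units.
ages = {"a": 20, "b": 30, "c": 40, "d": 50, "e": 60, "f": 70}
print(round(average_baseline_gap(ages), 2))
```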

What are the characteristics of quasi-experimental designs?

Ethical considerations typically will not allow random withholding of an intervention with known efficacy. Thus, if the efficacy of an intervention has not been established, a randomized controlled trial is the design of choice to determine efficacy. But if the intervention under study incorporates an accepted, well-established therapeutic intervention, or if the intervention has questionable efficacy or safety based on previously conducted studies, then ethical concerns about randomizing patients are sometimes raised. In medical informatics, it is often believed before implementation that an informatics intervention will likely be beneficial, so medical informaticians and hospital administrators are often reluctant to randomize medical informatics interventions. In addition, there is often pressure to implement the intervention quickly because of its believed efficacy, which leaves researchers too little time to plan a randomized trial. We then performed a systematic review of four years of publications from two informatics journals.

Quasi-experimental designs encompass various approaches, including nonequivalent group designs, interrupted time series designs, and natural experiments. Each design offers unique advantages and limitations, providing researchers with versatile tools to explore causal relationships in different contexts.

The authors did not account for other factors that could have affected the difference between the treatment and comparison groups, such as race/ethnicity. The groups were also significantly different in age, but the authors did not control for this difference in the analyses. These preexisting differences between the groups, and not the financial education program, could explain the observed differences in outcomes. Therefore, the study is not eligible for a moderate causal evidence rating, the highest rating available for nonexperimental designs.
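One common way to quantify a baseline imbalance like the age difference described above is the standardized mean difference (SMD). It is the difference in group means divided by a pooled standard deviation. The sketch below is a minimal version that assumes the pooled SD is taken as the square root of the average of the two sample variances. The ages are hypothetical. A frequently cited rule of thumb treats an absolute SMD above about 0.1 as meaningful imbalance.

```python
from statistics import mean, variance


def standardized_mean_difference(treated, comparison):
    """Difference in group means divided by the pooled standard deviation."""
    pooled_sd = ((variance(treated) + variance(comparison)) / 2) ** 0.5
    return (mean(treated) - mean(comparison)) / pooled_sd


# Hypothetical ages for a treatment group and a comparison group.
treated_ages = [30, 32, 34, 36]
comparison_ages = [40, 42, 44, 46]

smd = standardized_mean_difference(treated_ages, comparison_ages)
print(round(smd, 2), "imbalanced" if abs(smd) > 0.1 else "balanced")
```

Unlike a significance test, the SMD does not grow with sample size, which makes it a common choice for balance diagnostics.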

Interrupted Time Series

External validity can be improved when the intervention is applied to entire communities, as with some of the community-randomized studies described in Table 2 (12, 21). In these cases, the results are closer to the conditions that would apply if the interventions were conducted ‘at scale’, with a large proportion of a population receiving the intervention. The study by Grant et al. uses a variant of the stepped-wedge design (SWD) in which individuals within a setting are enumerated and then randomized to receive the intervention. Individuals contributed follow-up time to the “pre-clinic” phase from the baseline date established for the cohort until the actual date of their first clinic visit, and to the “post-clinic” phase thereafter.
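The person-time rule just described can be sketched as a small function. This is a minimal illustration, not the study's own code. It assumes follow-up is counted in whole days, the first clinic visit never precedes the baseline date, and anyone with no visit before the end of follow-up contributes only pre-clinic time. The function name and dates are hypothetical.

```python
from datetime import date


def split_person_time(baseline, first_visit, end_of_follow_up):
    """Split one individual's follow-up into (pre-clinic days, post-clinic days).

    Assumes baseline <= first_visit whenever a visit occurs.
    """
    if first_visit is None or first_visit > end_of_follow_up:
        # No clinic visit during follow-up: all time counts as pre-clinic.
        return (end_of_follow_up - baseline).days, 0
    pre = (first_visit - baseline).days
    post = (end_of_follow_up - first_visit).days
    return pre, post


# Hypothetical individual: cohort baseline Jan 1, first clinic visit Mar 1.
print(split_person_time(date(2020, 1, 1), date(2020, 3, 1), date(2020, 12, 31)))
```

Summing these pairs across individuals gives the exposure denominators for comparing event rates between the two phases.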

Even when a design that randomizes locations is carried out successfully, the locations may differ in other respects (confounding variables), which further complicates analysis and interpretation. Ethical concerns often arise when randomizing participants to different groups could deny some individuals access to beneficial treatments or interventions. In such cases, quasi-experimental designs provide an ethical alternative, allowing researchers to study the impact of interventions without depriving anyone of potential benefits. First, as mentioned briefly above, it is important to select a control group that is as similar as possible to the intervention site(s), which can include matching at both the health care network and clinic level (e.g., Kirchner et al., 2014).

Furthermore, such allocation schemes typically require analytic models that account for this clustering and the resulting correlations among error structures (e.g., generalized estimating equations [GEE] or mixed-effects models; Schildcrout et al., 2018). In a pretest-posttest design, the dependent variable is measured once before the treatment is implemented and once after it is implemented. This would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. Because quasi-experiments allow better control of confounding variables than other nonexperimental studies, they have higher internal validity than other nonexperimental research, though lower than true experiments. And because they are often carried out in real-world settings, they can have higher external validity than many true experiments.
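A single-group pretest-posttest comparison attributes all change to the treatment. Adding a nonequivalent comparison group lets the analysis subtract the change that happened anyway. The difference-in-differences calculation below is a minimal sketch of that idea using group means only. It assumes that, without the treatment, both groups would have changed by the same amount (the parallel-trends assumption). It does not handle clustering or covariates, which is what the GEE or mixed-effects models mentioned above would address. The scores are hypothetical.

```python
from statistics import mean


def difference_in_differences(pre_treat, post_treat, pre_comp, post_comp):
    """Pre-post change in the treatment group minus the pre-post change in the comparison group."""
    change_treat = mean(post_treat) - mean(pre_treat)
    change_comp = mean(post_comp) - mean(pre_comp)
    return change_treat - change_comp


# Hypothetical test scores for two intact classes (not randomly assigned).
print(difference_in_differences(
    pre_treat=[60, 62, 64], post_treat=[70, 72, 74],
    pre_comp=[55, 57, 59], post_comp=[58, 60, 62],
))
```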

ADEPT was a clustered SMART (NeCamp et al., 2017) designed to inform an adaptive sequence of implementation strategies for implementing an evidence-based collaborative chronic care model, Life Goals (Kilbourne et al., 2014c; Kilbourne et al., 2012a), in community-based practices. To strengthen causal inference in pre-post designs with nonequivalent control groups, the best strategies improve the comparability of the control group with respect to potential covariates that are related to the outcome of interest but are not under investigation. One strategy involves creating a cohort and then using targeted sampling to inform the matching of individuals within the cohort.
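Matching individuals within a cohort can take many forms. The sketch below shows one simple version: greedy 1:1 nearest-neighbor matching without replacement on a single covariate. It is an illustration under stated assumptions, not the procedure used in the studies cited. It assumes one numeric covariate (hypothetical ages) and breaks distance ties by comparison-unit id so the result is reproducible. Real applications usually match on several covariates or on a propensity score.

```python
def nearest_neighbor_match(treated, pool):
    """Greedy 1:1 matching without replacement on a single covariate.

    treated, pool: dicts mapping unit id -> covariate value (e.g., age).
    Returns a dict mapping each treated id to its matched comparison id.
    """
    available = dict(pool)
    matches = {}
    # Process treated units in sorted id order so results are reproducible.
    for t_id, t_val in sorted(treated.items()):
        if not available:
            break  # comparison pool exhausted
        # Closest remaining comparison unit; ties broken by id.
        c_id = min(available, key=lambda c: (abs(available[c] - t_val), c))
        matches[t_id] = c_id
        del available[c_id]  # without replacement
    return matches


# Hypothetical cohort: two intervention-site individuals, four potential controls.
print(nearest_neighbor_match({"t1": 45, "t2": 60}, {"c1": 44, "c2": 47, "c3": 59, "c4": 80}))
```

Greedy matching depends on processing order. Optimal matching, which minimizes total distance across all pairs, is a common alternative when that matters.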

However, because they couldn’t afford to pay everyone who qualified for the program, they had to use a random lottery to distribute slots.

Nonequivalent group designs, pretest-posttest designs, and regression discontinuity designs are only a few of the main types. An interrupted time series (ITS) design involves collecting outcome data at multiple time points before and after an intervention is introduced at a given point in time at one or more sites (6, 13). The pre-intervention outcome data are used to establish an underlying trend that is assumed to continue unchanged in the absence of the intervention under study (i.e., the counterfactual scenario). Any change in outcome level or trend from the counterfactual scenario in the post-intervention period is then attributed to the impact of the intervention.
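A common way to estimate the level and trend changes described above is segmented regression. The sketch uses the model y_t = b0 + b1·t + b2·post_t + b3·(t − start)·post_t, where post_t is 1 from the intervention onward. Here b0 + b1·t is the projected pre-intervention trend (the counterfactual), b2 is the immediate level change, and b3 is the change in slope. It is a minimal, dependency-free sketch that fits ordinary least squares via the normal equations. It assumes a single site, equally spaced time points, and a hypothetical weekly productivity series like the factory example earlier. It ignores autocorrelation, which real ITS analyses usually need to address.

```python
def solve(a, b):
    """Solve a small linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def segmented_regression(y, start):
    """OLS fit of y_t = b0 + b1*t + b2*post_t + b3*(t - start)*post_t; returns [b0, b1, b2, b3]."""
    rows = []
    for t in range(len(y)):
        post = 1.0 if t >= start else 0.0
        rows.append([1.0, float(t), post, (t - start) * post])
    k = 4
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yt for r, yt in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical 24-week series: upward trend, intervention at week 12 that
# lowers the level by 8 and bends the slope down by 1 per week.
start = 12
y = [100 + 0.5 * t + ((-8 - 1.0 * (t - start)) if t >= start else 0.0) for t in range(24)]
b0, b1, b2, b3 = segmented_regression(y, start)
print(f"level change {b2:.2f}, slope change {b3:.2f}")
```

The estimated effect at any post-intervention time t is b2 + b3·(t − start), the gap between the fitted series and its counterfactual projection.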

Traditional RCTs strongly prioritize internal validity over external validity by employing strict eligibility criteria and rigorous data collection methods. The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. But because participants are not randomly assigned—making it likely that there are other differences between conditions—quasi-experimental research does not eliminate the problem of confounding variables.

SMARTs are multistage randomized trials in which some or all participants are randomized more than once, often based on ongoing information (e.g., treatment response). In implementation research, SMARTs can inform optimal sequences of implementation strategies to maximize downstream clinical outcomes. Thus, such designs are well-suited to answering questions about which implementation strategies should be used, and in what order, to achieve the best outcomes in a given context. A quasi-experimental design is used when it is not logistically feasible or ethical to conduct a randomized controlled trial.
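The re-randomization logic of a SMART can be sketched in a few lines. This is a minimal, individual-level illustration with entirely hypothetical strategy labels. Everyone is randomized at stage 1. Response is then assessed. Only non-responders are randomized again, to either augment or switch their strategy, while responders continue. A clustered SMART such as ADEPT would randomize sites rather than individuals. Response is passed in as a callback because in a real trial it is observed only after stage 1.

```python
import random


def smart_assign(participants, responded, seed=0):
    """Two-stage assignment for a hypothetical SMART.

    Stage 1: every participant is randomized to strategy "A" or "B".
    Stage 2: non-responders are re-randomized to "augment" or "switch";
    responders "continue" their stage-1 strategy.
    responded(pid, first_strategy) -> bool reports response after stage 1.
    """
    rng = random.Random(seed)
    plan = {}
    for pid in participants:
        first = rng.choice(["A", "B"])
        if responded(pid, first):
            second = "continue"
        else:
            second = rng.choice(["augment", "switch"])
        plan[pid] = (first, second)
    return plan


# Hypothetical response status, known only after stage 1 in a real trial.
responders = {"p1", "p3"}
print(smart_assign(["p1", "p2", "p3", "p4"], lambda pid, first: pid in responders))
```

Each resulting (stage-1, stage-2) path corresponds to one embedded adaptive strategy, and comparing these paths is how a SMART addresses the "which strategy, in what order" question.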

Quasi-experiments are studies that aim to evaluate interventions but that do not use randomization. Similar to randomized trials, quasi-experiments aim to demonstrate causality between an intervention and an outcome. Quasi-experimental studies can use both preintervention and postintervention measurements as well as nonrandomly selected control groups.